Welcome to the Adaptive blog!

In this first post we will be talking about Simple Binary Encoding, aka SBE.

Martin Thompson (ex-CTO at LMAX, now at Real Logic) and Todd Montgomery (ex-CTO at 29WEST, now at Informatica) have been working on a reference implementation of Simple Binary Encoding, a new marshalling standard for low and ultra-low latency FIX.

My colleagues and I at Adaptive Consulting have been porting their Java and C++ APIs to .NET.

Why?

You are probably wondering: “Why are those guys reinventing the wheel? Serialization is a solved problem...”

In many cases I would say you are right, but in low-latency and/or high-throughput systems serialization can become a limiting factor. Some time ago I built an FX price distribution engine for a major financial institution and managed to get reasonable latencies at 5x the throughput of normal market conditions: sub-millisecond at the 99th percentile, measured from reading a price tick off the wire, through processing it, to publishing it.

We had to design that system carefully. One of the major limiting factors was memory pressure, which caused stop-the-world GCs and the resulting latency spikes. To limit GCs you have to limit allocation rates. We reached a point where the main source of allocation was our serialization API (Google Protocol Buffers on that project), and there was not much we could do about it without major rework.
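To make the allocation point concrete, here is a minimal Java sketch (not the SBE API). The `TickEncoding` class and its tick layout (an `int` symbol id followed by bid and ask as scaled `long` prices) are hypothetical. The first method allocates a new buffer per message, which is exactly the kind of garbage that eventually triggers a GC; the second overwrites one caller-owned buffer, so the steady-state path allocates nothing.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical tick layout (little-endian):
//   offset 0: int symbolId, offset 4: long bid, offset 12: long ask (prices as scaled longs)
final class TickEncoding {
    static final int TICK_LENGTH = 4 + 8 + 8;

    // Allocating style: a new ByteBuffer and backing array per message,
    // which become garbage as soon as the message has been sent.
    static byte[] encodeAllocating(int symbolId, long bid, long ask) {
        ByteBuffer buf = ByteBuffer.allocate(TICK_LENGTH).order(ByteOrder.LITTLE_ENDIAN);
        buf.putInt(symbolId).putLong(bid).putLong(ask);
        return buf.array();
    }

    // Reusing style: the caller allocates one buffer up front (with little-endian order)
    // and every tick overwrites it in place, so this path allocates nothing.
    static int encodeInto(ByteBuffer reused, int symbolId, long bid, long ask) {
        reused.putInt(0, symbolId);
        reused.putLong(4, bid);
        reused.putLong(12, ask);
        return TICK_LENGTH;
    }
}
```

Both methods produce identical bytes; the only difference is who owns the memory and how often it is allocated.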

SBE would have pushed the limits of this system further and offered more predictable latencies.

How it all started

I worked with Martin some time ago and ported the Disruptor to .NET. It was a good project and I had a lot of fun, so when he told me a few weeks ago that he was working on some cool stuff with Todd, I immediately offered to join efforts and build a C# version of SBE.

What is SBE?

SBE is both the name of a standard and the name of the API, just to make sure you guys get confused 🙂

The standard is defined by the FIX community, more precisely by its High Performance Working Group, and it specifies:

- what the schema describing your messages should look like (a kind of schema for schemas);

- how messages should be encoded on the wire;

- how the API implementing the spec should behave.
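As an illustration of the wire-encoding part, the Java sketch below writes and reads a fixed 8-byte message header made of four unsigned 16-bit little-endian fields: `blockLength`, `templateId`, `schemaId` and `version`. This layout is modelled on the header described in later versions of the SBE specification, where the header is itself defined in the schema; treat it as an assumption rather than the exact header of this release. `MessageHeader` is a hypothetical name.

```java
import java.nio.ByteBuffer;

// Hypothetical 8-byte header: four unsigned 16-bit fields.
// The caller is assumed to have set the buffer to little-endian order.
final class MessageHeader {
    static final int ENCODED_LENGTH = 8;

    static void write(ByteBuffer buf, int offset, int blockLength, int templateId, int schemaId, int version) {
        buf.putShort(offset, (short) blockLength);
        buf.putShort(offset + 2, (short) templateId);
        buf.putShort(offset + 4, (short) schemaId);
        buf.putShort(offset + 6, (short) version);
    }

    // Java has no unsigned short, so mask to recover values above 32767.
    static int blockLength(ByteBuffer buf, int offset) { return buf.getShort(offset) & 0xFFFF; }
    static int templateId(ByteBuffer buf, int offset) { return buf.getShort(offset + 2) & 0xFFFF; }
    static int schemaId(ByteBuffer buf, int offset) { return buf.getShort(offset + 4) & 0xFFFF; }
    static int version(ByteBuffer buf, int offset) { return buf.getShort(offset + 6) & 0xFFFF; }
}
```

A decoder reads the header first, uses `templateId` to pick the right message decoder, and uses `blockLength` and `version` to know how much fixed-size data the sender actually wrote.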

As I said, SBE is going to be used in FIX for low-latency use cases in finance, but it’s absolutely NOT limited to FIX.

One can define schemas for completely different problem spaces and use SBE as a general-purpose marshalling mechanism.

The toolchain

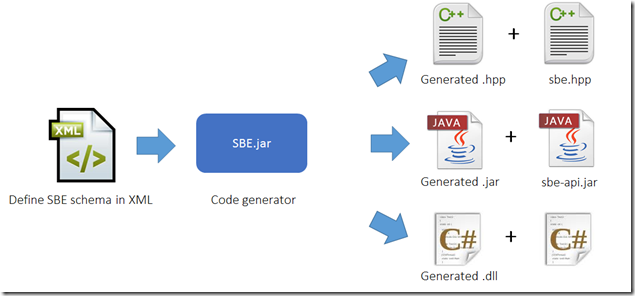

Martin and Todd were asked to create a reference implementation for this spec in Java and C++.

If you are familiar with Google Protocol Buffers, the overall process to define your messages is very similar:

- define your schema (XML-based, as specified by the standard)

- use SbeTool (a Java-based utility) to generate encoders and decoders for your messages in Java, C++, and/or C#

- use the generated stubs for the SBE API in your app
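The generated stubs are flyweights: rather than copying data into objects, they read and write fields at fixed offsets in a buffer. The Java sketch below is hand-written in that spirit and is NOT SbeTool output; the `Car` fields and their offsets are hypothetical. One `CarFlyweight` instance can be re-wrapped over each incoming message, so decoding allocates nothing.

```java
import java.nio.ByteBuffer;

// Hypothetical Car message, fixed-size block of 13 bytes:
//   offset 0: long serialNumber, offset 8: int modelYear, offset 12: byte available (0/1)
final class CarFlyweight {
    static final int BLOCK_LENGTH = 13;
    private ByteBuffer buffer;
    private int offset;

    // wrap() only records where the message lives: no copy, no allocation.
    CarFlyweight wrap(ByteBuffer buffer, int offset) {
        this.buffer = buffer;
        this.offset = offset;
        return this;
    }

    CarFlyweight serialNumber(long value) { buffer.putLong(offset, value); return this; }
    long serialNumber() { return buffer.getLong(offset); }

    CarFlyweight modelYear(int value) { buffer.putInt(offset + 8, value); return this; }
    int modelYear() { return buffer.getInt(offset + 8); }

    CarFlyweight available(boolean value) { buffer.put(offset + 12, (byte) (value ? 1 : 0)); return this; }
    boolean available() { return buffer.get(offset + 12) == 1; }
}
```

Because every field sits at a known offset, reading one field does not require parsing the fields before it, which is one reason this style tends to outperform tag-value formats.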

Messages

The message format offers everything you would expect for modelling:

- all sizes of signed and unsigned integers as primitive types, plus float and double

- composite structures, reusable across messages

- enums and bitsets

- repeating blocks, which can be nested

- variable-length fields for strings and byte[]
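Repeating blocks (groups) are laid out as a small dimension header followed by fixed-size entries. In this hypothetical Java sketch the header is a `uint16` block length plus a `uint16` entry count, and each entry holds a `uint16` speed and a `float` mpg; the exact header types come from the schema, so treat these as assumptions. The decoder steps over entries using the block length it reads from the wire rather than its own constant.

```java
import java.nio.ByteBuffer;

// Assumed layout: header = uint16 blockLength + uint16 numInGroup,
// entry = uint16 speed + float mpg. Caller sets the buffer's byte order.
final class FuelFiguresGroup {
    static final int HEADER_LENGTH = 4;
    static final int ENTRY_LENGTH = 2 + 4;

    // Writes the whole group at offset and returns the number of bytes used.
    static int encode(ByteBuffer buf, int offset, int[] speeds, float[] mpgs) {
        buf.putShort(offset, (short) ENTRY_LENGTH);
        buf.putShort(offset + 2, (short) speeds.length);
        int pos = offset + HEADER_LENGTH;
        for (int i = 0; i < speeds.length; i++) {
            buf.putShort(pos, (short) speeds[i]);
            buf.putFloat(pos + 2, mpgs[i]);
            pos += ENTRY_LENGTH;
        }
        return pos - offset;
    }

    // Sums mpg over all entries. Stepping by the encoded blockLength (not ENTRY_LENGTH)
    // lets a decoder built against an older schema skip fields a newer one appended.
    static float totalMpg(ByteBuffer buf, int offset) {
        int blockLength = buf.getShort(offset) & 0xFFFF;
        int count = buf.getShort(offset + 2) & 0xFFFF;
        float total = 0f;
        int pos = offset + HEADER_LENGTH;
        for (int i = 0; i < count; i++) {
            total += buf.getFloat(pos + 2);
            pos += blockLength;
        }
        return total;
    }
}
```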

The type system is portable: for instance, you can use the Java API to decode messages created by the .NET API (or any other combination).
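Cross-language decoding only works because every implementation agrees on the byte layout, including byte order. A small Java illustration: `java.nio.ByteBuffer` defaults to big-endian, so an implementation targeting a little-endian schema (assumed here) has to set the order explicitly, otherwise its integers will not match what the other languages write. `ByteOrderDemo` is a hypothetical name.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Encodes one int with the requested byte order and returns the raw bytes.
final class ByteOrderDemo {
    static byte[] encodeInt(int value, ByteOrder order) {
        ByteBuffer buf = ByteBuffer.allocate(4).order(order);
        buf.putInt(0, value);
        return buf.array();
    }
}
```

With `0x01020304`, little-endian order yields the bytes `04 03 02 01` and big-endian yields `01 02 03 04`; a decoder using the wrong order would read a completely different number.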

There is also support for optional fields and for versioning (backward compatibility), but note that this is still a work in progress at both the specification and API levels.
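One common way to express an optional fixed-size field without extra presence bits is a reserved "null" value; as we understand the specification, unsigned types use their maximum value for this. Versioning can then be handled by the decoder comparing a field's offset with the block length the sender actually wrote. The Java sketch below is hypothetical (`VersionedDecoder`, and a `discount` field assumed to be added in a later version at offset 8) and only illustrates the idea.

```java
import java.nio.ByteBuffer;

final class VersionedDecoder {
    static final long UINT32_NULL = 0xFFFF_FFFFL; // sentinel meaning "no value"
    static final int DISCOUNT_OFFSET = 8;         // hypothetical field added in a later version

    // Stores an unsigned 32-bit value (the low 32 bits of the long).
    static void putUint32(ByteBuffer buf, int index, long value) {
        buf.putInt(index, (int) value);
    }

    // Returns the field, or UINT32_NULL when it is unset or when the sender's block
    // (actingBlockLength) is too short to contain it, i.e. an older message version.
    static long discount(ByteBuffer buf, int offset, int actingBlockLength) {
        if (actingBlockLength < DISCOUNT_OFFSET + 4) {
            return UINT32_NULL;
        }
        return buf.getInt(offset + DISCOUNT_OFFSET) & 0xFFFF_FFFFL;
    }
}
```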

You said it’s fast?

It’s pretty damn fast… We have written several benchmarks with different message shapes, ranging from a car model to a market data tick, and used Google Protocol Buffers as the baseline. Why Protocol Buffers? Because it is available in all the languages we were targeting, and it is widely used and adopted. We will likely add benchmarks for other APIs later.

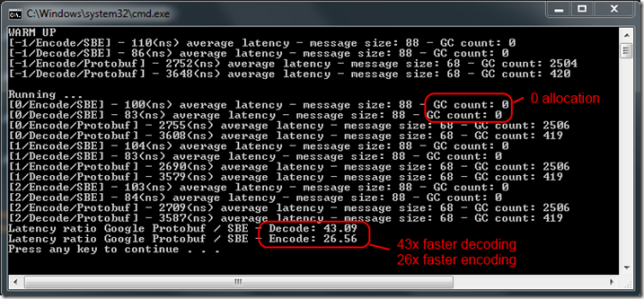

You can have a look at those benchmarks and run them on your own hardware. This is what I am seeing on my laptop:

- 20 to 40 million messages encoded or decoded per second, on a single thread

- that’s 30 to 40 ns average latency per message. Let me say it again… 30 to 40 NANOseconds.

- depending on the benchmark, we see a 20 to 50 times throughput increase compared to Google Protocol Buffers.
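Throughput and average latency are related by simple arithmetic: on a single thread, the average time per message is the inverse of the throughput. For example, 20 million messages per second is 50 ns per message and 40 million is 25 ns. A trivial Java helper (hypothetical names) for converting your own benchmark numbers:

```java
// Converts between single-threaded throughput and average time per message:
// nanosPerMessage = 1e9 / messagesPerSecond, and vice versa.
final class ThroughputMath {
    static double nanosPerMessage(double messagesPerSecond) {
        return 1e9 / messagesPerSecond;
    }

    static double messagesPerSecond(double nanosPerMessage) {
        return 1e9 / nanosPerMessage;
    }
}
```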

It is also worth mentioning that the API does not allocate during encoding and decoding, which means no GC pressure and hence no resulting GC pauses, on this thread or on others.

This is a typical run of the .NET implementation on my 3-year-old laptop.

Note that the Java and C++ implementations run faster; we still have work to do!

Note: we try to make our benchmarks as fair as possible. If you think you could get better performance out of Google Protocol Buffers using a different version of the API or a different implementation of the benchmark, please let us know!

What’s coming next?

SBE is only in beta and there is still a lot to do, but we think it is in a state where you can start giving it a spin: we would love to get your views and feedback, good or bad.

Feel free to post requests or questions in the issue tracker on GitHub.

Fork it! Clone it!

You will find the main repository here: https://github.com/real-logic/simple-binary-encoding

Visit the wiki for the documentation: https://github.com/real-logic/simple-binary-encoding/wiki

We have a separate repository for the benchmarks: https://github.com/real-logic/message-codec-bench

If you are after the .NET implementation specifically, you can look at our fork: https://github.com/AdaptiveConsulting/simple-binary-encoding

NuGet package for .NET users

We published a NuGet package for .NET users. It contains:

- SBE.dll, which the generated code depends on

- SbeTool.jar, to generate your encoders and decoders

- a small sample, to quickly get you up and running

https://www.nuget.org/packages/Adaptive.SBE

From Visual Studio, search for “SBE” and include pre-release packages (we are still in beta!)

Enjoy!

Reblogged this on Many cores and commented:

Nice to see a strongly performance oriented serialization library. It is even better when it is a standard implementation. Try this!

Pingback: Design principles for SBE, the ultra-low latency marshaling API | Adaptive

Your results look really promising; I will definitely visit your blog regularly.

Looks like a good, fast alternative serialization API. Keep it up, guys!

Great lib and great performance results.

I’ve just run the perf test against Marc Gravell’s protobuf-net implementation and managed to produce better results than with Jon Skeet’s port of the Java version. I’ll send a pull request for the new test. Here are the results:

```
[0/Encode/SBE] – 191(ns) average latency – message size: 90 – GC count: 0
[0/Decode/SBE] – 97(ns) average latency – message size: 90 – GC count: 0
[0/Encode/Protobuf-net] – 1440(ns) average latency – message size: 70 – GC count: 0
[0/Decode/Protobuf-net] – 1777(ns) average latency – message size: 70 – GC count: 63
[1/Encode/SBE] – 194(ns) average latency – message size: 90 – GC count: 0
[1/Decode/SBE] – 99(ns) average latency – message size: 90 – GC count: 0
[1/Encode/Protobuf-net] – 1441(ns) average latency – message size: 70 – GC count: 0
[1/Decode/Protobuf-net] – 1857(ns) average latency – message size: 70 – GC count: 63
[2/Encode/SBE] – 193(ns) average latency – message size: 90 – GC count: 0
[2/Decode/SBE] – 98(ns) average latency – message size: 90 – GC count: 0
[2/Encode/Protobuf-net] – 1456(ns) average latency – message size: 70 – GC count: 0
[2/Decode/Protobuf-net] – 1794(ns) average latency – message size: 70 – GC count: 63
[3/Encode/SBE] – 189(ns) average latency – message size: 90 – GC count: 0
[3/Decode/SBE] – 97(ns) average latency – message size: 90 – GC count: 0
[3/Encode/Protobuf-net] – 1443(ns) average latency – message size: 70 – GC count: 0
[3/Decode/Protobuf-net] – 1781(ns) average latency – message size: 70 – GC count: 63
[4/Encode/SBE] – 192(ns) average latency – message size: 90 – GC count: 0
[4/Decode/SBE] – 96(ns) average latency – message size: 90 – GC count: 0
[4/Encode/Protobuf-net] – 1424(ns) average latency – message size: 70 – GC count: 0
[4/Decode/Protobuf-net] – 1774(ns) average latency – message size: 70 – GC count: 63

Latency ratio Google Protobuf-net / SBE – Decode: 18.44
Latency ratio Google Protobuf-net / SBE – Encode: 7.51
```
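As a sanity check on the two summary lines, the Java snippet below averages the five runs for each codec and divides the Protobuf-net mean by the SBE mean. This assumes that is how the benchmark computes its ratio; with the printed (rounded) latencies it gives roughly 18.45 for decode and 7.51 for encode, in line with the reported figures.

```java
// Per-run average latencies (ns) copied from the results above.
final class LatencyRatio {
    static final int[] SBE_ENCODE = {191, 194, 193, 189, 192};
    static final int[] SBE_DECODE = {97, 99, 98, 97, 96};
    static final int[] PROTOBUF_NET_ENCODE = {1440, 1441, 1456, 1443, 1424};
    static final int[] PROTOBUF_NET_DECODE = {1777, 1857, 1794, 1781, 1774};

    static double mean(int[] values) {
        long sum = 0;
        for (int v : values) {
            sum += v;
        }
        return (double) sum / values.length;
    }

    // Assumed definition: mean baseline latency divided by mean SBE latency.
    static double ratio(int[] baseline, int[] sbe) {
        return mean(baseline) / mean(sbe);
    }

    public static void main(String[] args) {
        System.out.printf("Decode ratio: %.2f%n", ratio(PROTOBUF_NET_DECODE, SBE_DECODE));
        System.out.printf("Encode ratio: %.2f%n", ratio(PROTOBUF_NET_ENCODE, SBE_ENCODE));
    }
}
```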

Btw, what are the plans for handling strings without generating allocations (i.e. no .GetBytes())?

You can read the bytes corresponding to the encoded string without allocation but if you want a string you will have to allocate.

If your system is just passing the string around without actually using it, you can use the byte[].

If you need to call any API which requires a string, you are screwed: the API is forcing you to allocate.
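When the string is only used for routing or lookups, it can often be matched without ever being decoded. A hypothetical Java sketch: the key is encoded to bytes once, up front, and each message's field is compared byte by byte in place, so the per-message path allocates nothing. It assumes ASCII data, where byte equality is string equality.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Matches an ASCII field at [offset, offset + length) against a fixed key without creating a String.
final class AsciiMatcher {
    private final byte[] key;

    AsciiMatcher(String key) {
        this.key = key.getBytes(StandardCharsets.US_ASCII); // one-time allocation
    }

    boolean matches(ByteBuffer buf, int offset, int length) {
        if (length != key.length) {
            return false;
        }
        for (int i = 0; i < length; i++) {
            if (buf.get(offset + i) != key[i]) {
                return false;
            }
        }
        return true;
    }
}
```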

I meant that there are intermediate allocations purely associated with the serialisation process. Sure, the string will already have been allocated, but currently there’s a second allocation when we write it to the DirectBuffer (a temporary byte[] returned by GetBytes()).

I raised an issue on github: https://github.com/real-logic/simple-binary-encoding/issues/113

Would you mind putting more details there? I’m looking at the code now and I can’t see any source of allocation when writing a byte[] to DirectBuffer but maybe I’m missing something.

This is the code to write a byte[] to DirectBuffer, maybe you are talking about something else?

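```csharp
// Copies up to `length` bytes from src (starting at `offset`) into the unmanaged
// buffer at `index`, truncated to the space left; assumes 0 <= index <= _capacity.
public int SetBytes(int index, byte[] src, int offset, int length)
{
    int count = Math.Min(length, _capacity - index);
    // Marshal.Copy writes straight from the managed array to unmanaged memory: no allocation.
    Marshal.Copy(src, offset, (IntPtr)(_pBuffer + index), count);
    return count;
}
```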
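For comparison, a Java sketch of the same operation over a `java.nio.ByteBuffer` (the `BufferWriter` name is hypothetical; this is not the SBE `DirectBuffer`). It truncates the copy to the remaining capacity like the C# version, and additionally clamps at zero when `index` is past the end. The byte loop keeps it portable to older JDKs; on Java 16 and later the absolute bulk `ByteBuffer.put(int index, byte[] src, int offset, int length)` can replace it.

```java
import java.nio.ByteBuffer;

final class BufferWriter {
    // Copies up to `length` bytes of src (from `offset`) into buffer at `index`,
    // truncated to the buffer's capacity; returns the number of bytes copied.
    static int setBytes(ByteBuffer buffer, int index, byte[] src, int offset, int length) {
        int count = Math.max(0, Math.min(length, buffer.capacity() - index));
        for (int i = 0; i < count; i++) {
            buffer.put(index + i, src[offset + i]); // absolute put: position is not moved
        }
        return count;
    }
}
```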

Actually, I missed an overload of Encoding.GetBytes() that lets us provide the byte[] to write into, so we can reuse a temporary buffer. The allocation I was talking about would take place before calling SetBytes().
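The Java counterpart of reusing a scratch array with that `Encoding.GetBytes()` overload is to keep a `CharsetEncoder` and encode straight into the destination `ByteBuffer`. In this hypothetical sketch the encoder and a `CharBuffer` staging area are allocated once and reused; it assumes every string fits within `maxChars` and, as in the discussion above, it only removes the intermediate `byte[]`, not the `String` itself.

```java
import java.nio.ByteBuffer;
import java.nio.CharBuffer;
import java.nio.charset.CharsetEncoder;
import java.nio.charset.CoderResult;
import java.nio.charset.StandardCharsets;

final class ReusableStringEncoder {
    private final CharsetEncoder encoder = StandardCharsets.UTF_8.newEncoder();
    private final CharBuffer chars; // reused staging area for the string's chars

    ReusableStringEncoder(int maxChars) {
        chars = CharBuffer.allocate(maxChars);
    }

    // Encodes s into dst starting at dst.position(); returns the number of bytes written.
    int encode(String s, ByteBuffer dst) {
        chars.clear();
        chars.put(s); // copies chars, throws BufferOverflowException if s exceeds maxChars
        chars.flip();
        encoder.reset();
        int start = dst.position();
        CoderResult result = encoder.encode(chars, dst, true);
        if (result.isError() || result.isOverflow()) {
            throw new IllegalArgumentException("cannot encode: " + result);
        }
        encoder.flush(dst);
        return dst.position() - start;
    }
}
```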

It would be nice to add a StringPut() method to DirectBuffer itself instead of sometimes accessing the source byte[] directly and sometimes accessing it through DirectBuffer.

I don’t think you would want that on the DirectBuffer itself: you’re not manipulating it directly anyway.

I was thinking maybe of generating additional methods on the generated stubs to wrap the logic around encoding and decoding strings (the stub knows which encoding to use).

This could wrap the kind of code you currently have to write each time for strings (here, for decoding):

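```csharp
// Copy the raw bytes of the variable-length field into the scratch buffer; returns the byte count.
var length = _frameCodec.GetPackageName(_buffer, 0, _buffer.Length);
// Look up the character encoding declared for this field in the schema.
var encoding = System.Text.Encoding.GetEncoding(FrameCodec.PackageNameCharacterEncoding);
// Decode the bytes: this is where the string allocation happens.
_irPackageName = encoding.GetString(_buffer, 0, length);
```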
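A generated helper along those lines could hide both the length lookup and the character encoding. The Java sketch below is hypothetical: it assumes a `uint16` length prefix followed by the encoded bytes, and `UTF_8` stands in for the encoding declared in the schema. It offers a `String`-returning accessor, which must allocate the `String`, plus an ASCII-only variant that appends into a caller-owned `StringBuilder` and allocates nothing once the builder has enough capacity.

```java
import java.nio.ByteBuffer;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

// Hypothetical accessor for a variable-length "packageName" field:
// uint16 length prefix (in the buffer's byte order) followed by the encoded bytes.
final class PackageNameField {
    static final Charset CHARSET = StandardCharsets.UTF_8; // stands in for the schema's encoding
    private final byte[] scratch = new byte[65535];          // reused; sized for the largest uint16 length

    // Convenience accessor: one String allocation per call, unavoidable when a String is needed.
    String packageName(ByteBuffer buf, int offset) {
        int length = buf.getShort(offset) & 0xFFFF;
        for (int i = 0; i < length; i++) {
            scratch[i] = buf.get(offset + 2 + i);
        }
        return new String(scratch, 0, length, CHARSET);
    }

    // ASCII-only alternative: appends into a caller-owned StringBuilder; returns the byte count.
    static int packageNameAscii(ByteBuffer buf, int offset, StringBuilder out) {
        int length = buf.getShort(offset) & 0xFFFF;
        for (int i = 0; i < length; i++) {
            out.append((char) (buf.get(offset + 2 + i) & 0xFF));
        }
        return length;
    }
}
```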

Just to let you know, we just migrated the blog to a new address, feel free to comment there if you have other questions.

The new address: http://weareadaptive.com/blog/